Overview of LM Studio running on the local system.

To install (or upgrade) the kagent custom resource definitions (CRDs), run the following command in your terminal:

> helm upgrade --install kagent-crds oci://ghcr.io/kagent-dev/kagent/helm/kagent-crds --namespace kagent --create-namespace

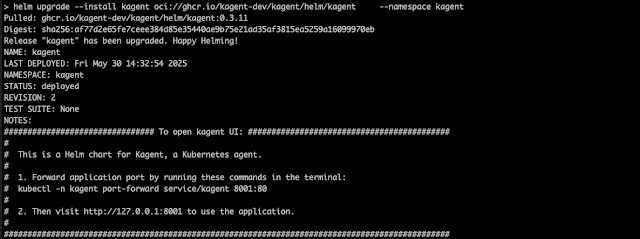

Next, to install kagent itself, run the following command:

> helm upgrade --install kagent oci://ghcr.io/kagent-dev/kagent/helm/kagent --namespace kagent

To verify that the installation was successful, list the model configurations (`mc` is the short name for the ModelConfig resource):

> kubectl get mc -n kagent

To see the full model configuration, request YAML output:

> kubectl get mc -n kagent -o yaml

This will return output similar to the following:

    apiVersion: v1
    items:
    - apiVersion: kagent.dev/v1alpha1
      kind: ModelConfig
      metadata:
        annotations:
          meta.helm.sh/release-name: kagent
          meta.helm.sh/release-namespace: kagent
        creationTimestamp: "2025-05-29T15:08:00Z"
        generation: 8
        labels:
          app.kubernetes.io/instance: kagent
          app.kubernetes.io/managed-by: Helm
          app.kubernetes.io/name: kagent
          app.kubernetes.io/version: 0.3.11
          helm.sh/chart: kagent-0.3.11
        name: default-model-config
        namespace: kagent
        resourceVersion: "26472"
        uid: a2c8db4e-88a5-4ecd-8e1b-73dba9c93ac2
      spec:
        apiKeySecretKey: OPENAI_API_KEY
        apiKeySecretRef: kagent-openai
        model: gemma-3-1b-it-qat
        modelInfo:
          family: unknown
          functionCalling: true
        openAI:
          baseUrl: http://192.168.1.33:1234/v1
        provider: OpenAI
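
kagent sends its requests to the `baseUrl` in this output, so it can help to confirm that LM Studio's OpenAI-compatible server answers at that address before opening the dashboard. The following is a minimal sketch, assuming `curl` is installed and the LM Studio server is running. The helper names `models_url` and `check_endpoint` are hypothetical, and the address is simply the one from the sample output.

```shell
# Assumption: this is the baseUrl from the ModelConfig output; replace it with yours.
BASE_URL="${BASE_URL:-http://192.168.1.33:1234/v1}"

# Build the model-listing URL from a base URL, dropping one trailing slash if present.
models_url() {
  printf '%s/models\n' "${1%/}"
}

# Ask the server for its model list. curl exits non-zero if the server is
# unreachable or responds with an HTTP error status.
check_endpoint() {
  curl --silent --show-error --fail --max-time 5 "$(models_url "$1")"
}
```

Running `check_endpoint "$BASE_URL"` should print a JSON list of the models LM Studio is serving. This only proves reachability from the machine where you run it; the kagent pods also need to reach the same address from inside the cluster.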

Once you have verified the configurations, you can proceed to browse the dashboard.

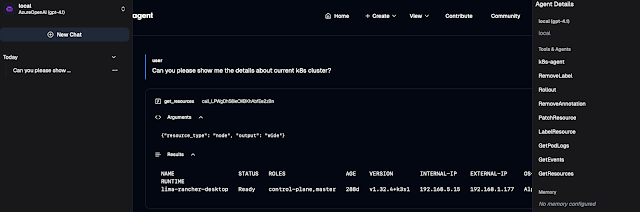

Here, you will see the default dashboard that comes with some built-in agents, providing you with a quick overview of the system's status and functionality.

If you wish to create a new agent, you can do so using the interface provided in the dashboard.

Try out the new agent and, in parallel, watch the logs of the local LLM setup.

Now, let's move from the local setup to Azure OpenAI. To make this work, we need to update the model configuration so that it points to Azure OpenAI instead of the local endpoint.
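
A model configuration for Azure OpenAI could look roughly like the one generated below. This is a sketch, not the manifest used in this post: the `azureOpenAI` field names (`azureEndpoint`, `azureDeployment`, `apiVersion`) are recalled from the kagent v1alpha1 provider documentation and should be checked against your installed CRD, for example with `kubectl explain modelconfig.spec.azureOpenAI`. The function name, resource name, secret name, and secret key are hypothetical.

```shell
# Print a ModelConfig manifest for Azure OpenAI to stdout.
# Assumptions (not from the original post): the resource and secret names and
# the azureOpenAI field names. Verify them against your installed CRD.
render_azure_modelconfig() {
  endpoint="$1"     # e.g. https://<your-resource>.openai.azure.com/
  model="$2"        # model name, e.g. gpt-4o-mini
  deployment="$3"   # name of your Azure OpenAI deployment
  api_version="$4"  # Azure OpenAI API version supported by your resource
  cat <<EOF
apiVersion: kagent.dev/v1alpha1
kind: ModelConfig
metadata:
  name: azure-openai-model-config
  namespace: kagent
spec:
  provider: AzureOpenAI
  model: ${model}
  apiKeySecretRef: kagent-azureopenai
  apiKeySecretKey: AZUREOPENAI_API_KEY
  azureOpenAI:
    azureEndpoint: "${endpoint}"
    azureDeployment: "${deployment}"
    apiVersion: "${api_version}"
EOF
}
```

You could review the output first and then pipe it into `kubectl apply -f -`. The referenced secret has to exist in the `kagent` namespace beforehand and hold your Azure OpenAI API key under the key named in `apiKeySecretKey`.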

After updating the configuration, we will create a new agent for this environment and use it to extract some information. We expect the answers to be noticeably more accurate, since we are now working with a full-size hosted model rather than the small local model (gemma-3-1b-it-qat) used for testing. 😆