Overview

In today's fast-moving world, we need a CI/CD platform that can scale in and out as requirements change. Autoscaling keeps costs down and means we don't have to worry about changing demand.

In this article, we'll walk through the implementation of such a setup: a Jenkins installation that runs on Kubernetes and scales automatically as needed.

Interestingly, this demo doesn't require any specific in-house or cloud platform. We'll do the whole exercise using Docker Desktop. I did this on Windows, but the same steps should work on Mac as well.

Here is a brief summary of the tools. I won't go into depth, assuming most readers are already familiar with them; otherwise, I suggest following the official documentation.

Jenkins -

Jenkins is the leading open source automation server. It provides hundreds of plugins to support building, deploying, and automating any project. Jenkins can be installed from native system packages, run as a container on a platform like Docker, or run standalone on any machine with a JRE installed.

Kubernetes -

Kubernetes is an open source container orchestration platform. The project is managed by the CNCF.

Final Setup -

Below is the final setup that we are going to build. Initially, only one master pod will be running, and slave pods will be provisioned dynamically based on demand.

- We don't have to worry about adding extra nodes when demand increases.

- New slave pods are added dynamically and the jobs are executed on them. After a job finishes, its pod is terminated.

Setup Requirements-

To complete this guide, you will need the following:

- Docker Desktop - I used Docker Desktop (with its built-in Kubernetes cluster enabled) on my local system, but the same setup can be built on high-capacity on-prem servers or any cloud-based solution.

- Docker Hub account - We will need an account with a container image repository to push the custom images for our Jenkins master and agents.

- kubectl - A CLI tool to connect to the local Kubernetes cluster.

Let's Build Docker Images for Jenkins

Let's start by building Docker images for our Jenkins components and then push them to Docker Hub.

After installing Docker Desktop and exposing it on localhost, you will be able to run all docker commands.

Once everything is in place, let's start creating the Dockerfiles.

Dockerfile for Jenkins Master -

We can begin by creating a file called Dockerfile in a "master" folder in the current directory to define the Jenkins master image:

FROM jenkins/jenkins:lts
# Recent jenkins/jenkins images ship jenkins-plugin-cli in place of install-plugins.sh
# ansicolor, greenballs: plugins for better UX (not mandatory)
# kubernetes: plugin for scaling Jenkins agents
RUN jenkins-plugin-cli --plugins ansicolor greenballs kubernetes
USER jenkins

Dockerfile for Jenkins Agent -

Now let's create two new folders, "slave1" and "slave2", to demonstrate how Jenkins picks the correct agent for different jobs.

Create an empty file named empty-test-file in each directory. We will copy it into the image as an identifier for each agent we are building:

Slave1 -

# Note: jenkins/jnlp-slave is deprecated upstream in favor of jenkins/inbound-agent
FROM jenkins/jnlp-slave
# For testing purposes only
COPY empty-test-file /jenkins-slave1
ENTRYPOINT ["jenkins-slave"]

Slave2 -

FROM jenkins/jnlp-slave
# For testing purposes only
COPY empty-test-file /jenkins-slave2
ENTRYPOINT ["jenkins-slave"]
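The folder layout described above can be sketched as follows. This is a minimal sketch, assuming a POSIX shell (for example Git Bash or WSL on Windows); the marker file name empty-test-file is taken from the COPY lines in the agent Dockerfiles.

```shell
#!/bin/sh
# Create one build-context folder per image.
mkdir -p master slave1 slave2

# Each agent folder gets an empty marker file; the agent Dockerfiles
# copy it into the image under a different name per agent.
touch slave1/empty-test-file slave2/empty-test-file
```

Each Dockerfile shown above then goes into its own folder (master, slave1, slave2).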

Let's Build the Docker Images and Push Them to Docker Hub

Note: In the commands below, replace deepforu47 with your own Docker Hub username.

From "master" folder -

$ docker build -t deepforu47/jenkins-master .

From "slave1" folder -

$ docker build -t deepforu47/jenkins-slave-jnlp1 .

From "slave2" folder -

$ docker build -t deepforu47/jenkins-slave-jnlp2 .

Log in to Docker Hub (in my case it picked up existing credentials, but otherwise it will ask you for your username and password) -

$ docker login

Authenticating with existing credentials...

Login Succeeded

Now, push the images to your Docker Hub account:

Note: In the command below, be sure to substitute your own Docker Hub account again.

$ docker push deepforu47/jenkins-master

$ docker push deepforu47/jenkins-slave-jnlp1

$ docker push deepforu47/jenkins-slave-jnlp2
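The build and push steps above can also be wrapped in a small helper so that the Docker Hub username is set in one place. This is only a sketch: DOCKER_USER and build_and_push are hypothetical names introduced here, and the helper assumes you have already run docker login.

```shell
#!/bin/sh
# Assumption: DOCKER_USER holds your Docker Hub username
# (falls back to the username used in this article).
DOCKER_USER="${DOCKER_USER:-deepforu47}"

# build_and_push FOLDER IMAGE_NAME
# Builds the Dockerfile found in FOLDER, tags it as DOCKER_USER/IMAGE_NAME,
# and pushes it only if the build succeeded.
build_and_push() {
  folder="$1"
  image="${DOCKER_USER}/$2"
  docker build -t "$image" "$folder" && docker push "$image"
}

# Usage (run from the directory that contains the three folders):
#   build_and_push master jenkins-master
#   build_and_push slave1 jenkins-slave-jnlp1
#   build_and_push slave2 jenkins-slave-jnlp2
```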

Below is a screenshot from my Docker Hub account -

Deploying Jenkins to the Local Docker Desktop Cluster -

Now let's create a Kubernetes Deployment for the Jenkins master node.

Deployment.yaml

Note: Make sure to change the image name to use your own Docker Hub username.

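# extensions/v1beta1 Deployments are no longer served as of Kubernetes 1.16;
# apps/v1 is used instead and requires an explicit selector.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: jenkins
spec:
  replicas: 1
  selector:
    matchLabels:
      app: jenkins
  template:
    metadata:
      labels:
        app: jenkins
    spec:
      containers:
        - name: jenkins
          image: deepforu47/jenkins-master
          env:
            # Skip the interactive setup wizard on first start
            - name: JAVA_OPTS
              value: -Djenkins.install.runSetupWizard=false
          ports:
            - name: http-port
              containerPort: 8080
            - name: jnlp-port
              containerPort: 50000
          volumeMounts:
            - name: jenkins-home
              mountPath: /var/jenkins_home
      volumes:
        # emptyDir is not persistent: Jenkins data is lost when the pod is deleted
        - name: jenkins-home
          emptyDir: {}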

Now, let's create two Kubernetes Services: one to access Jenkins and a second for internal communication between the Jenkins master and the agent nodes.

Service.yaml

apiVersion: v1
kind: Service
metadata:
  name: jenkins
spec:
  type: ClusterIP
  ports:
    - port: 80
      targetPort: 8080
  selector:
    app: jenkins
---
apiVersion: v1
kind: Service
metadata:
  name: jenkins-jnlp
spec:
  type: ClusterIP
  ports:
    - port: 50000
      targetPort: 50000
  selector:
    app: jenkins

Now let's deploy these manifests to the cluster with the commands below -

$ kubectl apply -f deployment.yaml

$ kubectl apply -f service.yaml
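Before querying the pods, it can help to wait until the Deployment has finished rolling out. The helper below is a minimal sketch: wait_for_jenkins is a hypothetical name, and the 120s default timeout is an arbitrary assumption rather than a value from this setup.

```shell
#!/bin/sh
# wait_for_jenkins [TIMEOUT]
# Blocks until the "jenkins" Deployment reports a completed rollout,
# or fails once TIMEOUT (default 120s, an assumed value) has passed.
wait_for_jenkins() {
  timeout="${1:-120s}"
  kubectl rollout status deployment/jenkins --timeout="$timeout"
}

# Usage:
#   wait_for_jenkins        # wait up to 120s
#   wait_for_jenkins 5m     # wait up to 5 minutes
```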

After this, querying Kubernetes for pods and services returns the following.

$ kubectl get pods
NAME                       READY   STATUS    RESTARTS   AGE
jenkins-6c94659b89-dsbxx   1/1     Running   1          3d

$ kubectl get svc
NAME           TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)     AGE
jenkins        ClusterIP   10.106.79.175   <none>        80/TCP      3d
jenkins-jnlp   ClusterIP   10.97.51.149    <none>        50000/TCP   3d
kubernetes     ClusterIP   10.96.0.1       <none>        443/TCP     3d

Expose the jenkins service-

Now, let's expose the Jenkins service and access Jenkins locally.

$ kubectl port-forward svc/jenkins 80:80

Forwarding from 127.0.0.1:80 -> 8080

Forwarding from [::1]:80 -> 8080

Handling connection for 80

Handling connection for 80

Handling connection for 80
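On some systems, binding local port 80 requires elevated privileges. In that case the same service can be forwarded to an unprivileged local port instead. This is a sketch only: forward_jenkins is a hypothetical helper, and 8080 is an assumed local port, not one used in the original setup.

```shell
#!/bin/sh
# forward_jenkins [LOCAL_PORT]
# Forwards LOCAL_PORT (default 8080, an assumed value) on this machine
# to port 80 of the "jenkins" Service, which targets 8080 in the pod.
forward_jenkins() {
  local_port="${1:-8080}"
  kubectl port-forward svc/jenkins "${local_port}:80"
}

# Usage:
#   forward_jenkins         # Jenkins is then reachable at http://localhost:8080
#   forward_jenkins 9090    # or at http://localhost:9090
```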

Enable the Jenkins Slaves Autoscaling-

Now, configure Jenkins with the Kubernetes cloud plugin so that it can spin up new Jenkins slave pods and scale automatically.

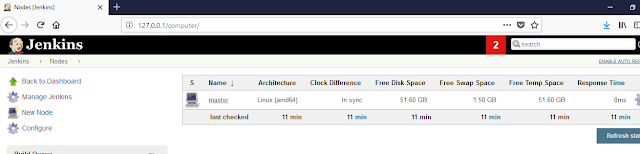

First, go to "Manage Jenkins" --> "Manage Nodes" and remove every agent other than "Master".

Finally, it will look like below -

After this, set the number of executors on the master to 0 by clicking its configure gear icon, so that builds run only on the dynamically provisioned agents.

Configure Jenkins Kubernetes plugins-

Now install the "kubernetes" plugin if it is not already present (the master image built above already includes it). Go to "Manage Jenkins" --> "Manage Plugins", search for "Kubernetes Plugin" under the Available tab, and install it.

Now, configure the Kubernetes plugin. Go to "Manage Jenkins" --> "Configure System", scroll down to the Cloud section, click "Add a new cloud", and select "Kubernetes".
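The cloud configuration also needs to know how to reach the cluster and how agents reach the master. The sketch below prints values that would be consistent with the two Services defined earlier. It rests on several assumptions: the field labels may differ between plugin versions, the cluster uses the default cluster.local DNS domain, everything is deployed in the default namespace unless you pass another one, and jenkins_cloud_settings is a hypothetical helper name.

```shell
#!/bin/sh
# jenkins_cloud_settings [NAMESPACE]
# Prints candidate values for the Kubernetes cloud form, derived from the
# Service names "jenkins" (port 80) and "jenkins-jnlp" (port 50000).
jenkins_cloud_settings() {
  ns="${1:-default}"
  echo "Kubernetes URL: https://kubernetes.default.svc"
  echo "Kubernetes Namespace: ${ns}"
  echo "Jenkins URL: http://jenkins.${ns}.svc.cluster.local"
  echo "Jenkins tunnel: jenkins-jnlp.${ns}.svc.cluster.local:50000"
}

# Usage:
#   jenkins_cloud_settings            # values for the "default" namespace
#   jenkins_cloud_settings ci         # values for a namespace named "ci"
```

The tunnel value is a plain host:port pair without a protocol prefix, since it points at the JNLP port rather than the web UI.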

Go to the Images section, click the Add Pod Template button, and select Kubernetes Pod Template. Fill out the Name and Labels fields with unique values to identify your first agent. We will use the label to specify which agent image should be used to run each build.

Next, in the Containers field, click the Add Container button and select Container Template. In the section that appears, fill out the following fields:

- Name: jnlp (this is required by the Jenkins agent)

- Docker image: deepforu47/jenkins-slave-jnlp1 (make sure to change the Docker Hub username)

- Command to run: Delete the value here

- Arguments to pass to the command: Delete the value here

Follow the same steps for the second template, using the deepforu47/jenkins-slave-jnlp2 image -

Test the dynamic build job-

Now, create two new jobs. In each job's Label Expression field, type the label you set for the first or the second Jenkins agent image, respectively.
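To confirm that each job really lands on the intended image, a shell build step in the job could check for the marker file copied in by the agent Dockerfiles. This is a minimal sketch: detect_agent is a hypothetical helper, and the optional ROOT argument exists only so the function can be tried outside a container.

```shell
#!/bin/sh
# detect_agent [ROOT]
# Reports which agent image the build is running on by looking for the
# marker files /jenkins-slave1 and /jenkins-slave2 under ROOT (default: /).
detect_agent() {
  root="${1:-}"
  if [ -e "${root}/jenkins-slave1" ]; then
    echo "agent image 1"
  elif [ -e "${root}/jenkins-slave2" ]; then
    echo "agent image 2"
  else
    echo "unknown agent image"
    return 1
  fi
}

# Usage inside a job's shell build step:
#   detect_agent
```

Because the function returns a non-zero status when no marker is found, a job that ends with this call fails when it runs on an unexpected image.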

Now, build these jobs and you will see the new slave pods being created. After the jobs finish, the newly created pods are terminated automatically.

$ kubectl get pod
NAME                       READY   STATUS    RESTARTS   AGE
jenkins-6c94659b89-dsbxx   1/1     Running   1          3d

$ kubectl get pod -w
NAME                        READY   STATUS    RESTARTS   AGE
jenkins-6c94659b89-dsbxx    1/1     Running   1          3d
jenkins-slave-jnlp1-jjkwh   1/1     Running   0          7s
jenkins-slave-jnlp2-1fpkf   1/1     Running   0          7s

$ kubectl get pod
NAME                        READY   STATUS        RESTARTS   AGE
jenkins-6c94659b89-dsbxx    1/1     Running       1          3d
jenkins-slave-jnlp1-jjkwh   1/1     Terminating   0          15s
jenkins-slave-jnlp2-1fpkf   1/1     Running       0          15s

$ kubectl get pod
NAME                       READY   STATUS    RESTARTS   AGE
jenkins-6c94659b89-dsbxx   1/1     Running   1          3d